The theory of threading

Implementing threading is not easy, by any means. By their nature, games want to use 100 percent of the CPU. As Tom Leonard, Valve's multi-threading guru, points out, "If you're not at 100%, you're doing a disservice to the game."

Threading is about making things work in parallel - in the case of Kentsfield, making four cores work at the same time. Games, however, are by their very nature serial: each system depends on the output of the previous one. Valve has decades of combined experience in single-threaded optimisation, including plenty of "novel techniques". Converting millions of lines of code to run in parallel rather than serially was therefore what Tom calls an "interesting challenge"!

Thread types

There are, broadly, four types of threading.

Single threading is relatively simple. "It's attractive to everyone, because contemporary CPUs perform so well, and so there are plenty of companies who are happy staying single threaded. In the long term, however, it's obviously obsolete," says Tom.

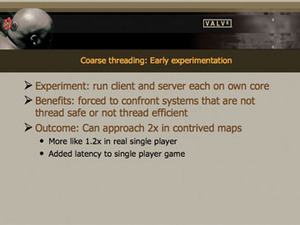

Coarse threading is the process of taking whole systems and putting each one on its own core. Conceptually, it's simple: rendering goes on one core, AI on another, physics on another and so on. Tom continues, "This essentially allows these systems to stay single threaded, and they only have to be multithreaded when it comes to synchronising them to a timeline thread to put them all back in order."
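
As a rough illustration of the coarse model, the sketch below gives each subsystem its own thread and uses two barriers so that a timeline (main) thread can gather every system's result in a fixed order once per frame. The subsystem functions (`ai_update`, `physics_update`, `sound_update`) and `run_coarse` are hypothetical stand-ins, not Valve code. Python is used for readability only: in CPython the global interpreter lock stops pure-Python code running on several cores at once, so this shows the structure rather than a real speed-up.

```python
import threading

# Hypothetical single-threaded subsystems: each returns a string describing
# the work it did for the given frame.
def ai_update(frame):
    return f"ai:{frame}"

def physics_update(frame):
    return f"physics:{frame}"

def sound_update(frame):
    return f"sound:{frame}"

SYSTEMS = [ai_update, physics_update, sound_update]

def run_coarse(num_frames):
    """Run each subsystem on its own thread; the timeline (main) thread
    collects their results in a fixed order once per frame."""
    parties = len(SYSTEMS) + 1               # one thread per system plus the timeline thread
    frame_start = threading.Barrier(parties)
    frame_end = threading.Barrier(parties)
    results = [None] * len(SYSTEMS)          # slot i is only ever written by system i
    timeline = []

    def system_thread(index, update):
        for frame in range(num_frames):
            frame_start.wait()               # wait for the timeline thread to begin the frame
            results[index] = update(frame)   # the system's own single-threaded work
            frame_end.wait()                 # signal that this frame's work is done

    threads = [threading.Thread(target=system_thread, args=(i, s))
               for i, s in enumerate(SYSTEMS)]
    for t in threads:
        t.start()
    for frame in range(num_frames):
        frame_start.wait()
        frame_end.wait()
        # Every system has finished this frame and is now blocked at
        # frame_start, so the results can be read and put back in order.
        timeline.append(tuple(results))
    for t in threads:
        t.join()
    return timeline
```

Note that the thread count here is fixed by the number of systems, not by the number of cores, which is why any extra cores would simply sit idle.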

For hardware enthusiasts, it's also a concept that's easy to work out - offloading subsystems to different components is old news, given that GPUs have been offloading rendering from the CPU for years now, and the concept of offloading physics is also well established.

Valve experimented with coarse threading, "even though we knew this was not really the direction we wanted to go. But we decided it would be an interesting experiment. It forced us to peer into the parts of our technology that were not really thread safe or thread efficient."

And, in some cases, performance could get worse. "In order to get the single player game to work with this model, we had to re-enable networking code - which brought in a multiplayer-level lag to single player gameplay."

The killer for coarse threading is that once the number of cores exceeds the number of systems you have, the extra cores sit completely idle - not very future-proof.

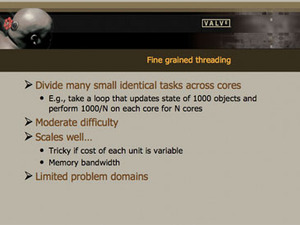

Fine-grain threading takes a more intricate approach. The idea is to take many similar or identical tasks and spread them across cores - for example, a loop that iterates over an array of data. For a loop of 1,000 iterations, you simply split it up by the number of cores. Each operation needs to be independent and must not affect another iteration's data. That is tricky to guarantee, and there's a further problem: the time per work unit can vary, so managing the timeline of outcomes and consequences can get difficult.
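
A minimal sketch of the fine-grained split, assuming a hypothetical per-element `process` function whose iterations are fully independent: `split_ranges` carves the loop's indices into one contiguous chunk per worker, and a thread pool runs the chunks. The same GIL caveat applies to pure-Python work, so treat this as a picture of the partitioning rather than a benchmark.

```python
import os
from concurrent.futures import ThreadPoolExecutor

def process(item):
    # Hypothetical per-iteration work: reads only its own input element.
    return item * item

def split_ranges(n, parts):
    """Divide indices 0..n-1 into at most `parts` contiguous (start, stop) ranges."""
    chunk = max(1, -(-n // parts))      # ceiling division, never zero
    return [(start, min(start + chunk, n)) for start in range(0, n, chunk)]

def parallel_for(data, num_workers=None):
    """Run process() over data, one contiguous chunk per worker thread."""
    num_workers = num_workers or os.cpu_count() or 1
    out = [None] * len(data)

    def run_chunk(bounds):
        start, stop = bounds
        for i in range(start, stop):
            out[i] = process(data[i])   # each index is written by exactly one chunk

    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # list() consumes the results so any exception from a chunk is re-raised here
        list(pool.map(run_chunk, split_ranges(len(data), num_workers)))
    return out
```

Equal-sized chunks are the simplest split, but they assume every iteration costs the same. When the time per work unit varies, some workers finish early and wait; handing out smaller chunks on demand balances the load better.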

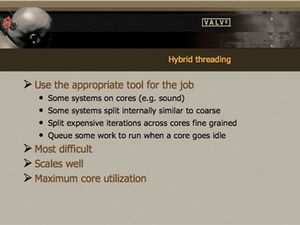

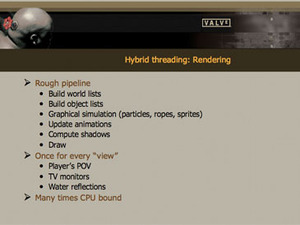

Hybrid threading is the approach that Valve eventually decided to take. It is, you'll be unsurprised to learn, a mixture of coarse and fine threading. "It's attempting to use the appropriate tool for the job in multiple combinations," according to Tom. "So, some systems operate really well just being parked on a core - an example is sound mixing. It doesn't really interact, doesn't really have a frame constraint, it works on its own set of data, and so it's really happy being pushed off."

But most systems aren't like that. "We have identified those systems that can be split internally in a coarse or fine grained fashion and we then work to get them onto cores in the most appropriate ways possible."
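
The hybrid idea can be sketched by combining both models: a self-contained system that, like sound mixing, owns its data and has no frame constraint is parked on its own thread and fed through a queue, while per-entity work that splits cleanly is spread across a thread pool each frame. `mix_sound`, `update_entity` and `run_hybrid` are hypothetical names chosen for this example; how Valve actually divides its systems isn't described beyond Tom's examples.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor

def mix_sound(commands, mixed):
    # Hypothetical sound mixer: owns its own data and has no frame constraint,
    # so it simply runs on its own thread until it receives the stop sentinel.
    while True:
        cmd = commands.get()
        if cmd is None:
            break
        mixed.append(f"mixed:{cmd}")

def update_entity(entity):
    # Hypothetical per-entity work that splits cleanly (fine-grained).
    return entity + 1

def run_hybrid(num_frames, entities):
    commands = queue.Queue()
    mixed = []
    # Coarse: the whole sound system is parked on one dedicated thread.
    sound = threading.Thread(target=mix_sound, args=(commands, mixed))
    sound.start()
    try:
        # Fine-grained: per-entity updates are spread over a pool each frame.
        with ThreadPoolExecutor() as pool:
            for frame in range(num_frames):
                entities = list(pool.map(update_entity, entities))
                commands.put(f"frame{frame}")   # fire-and-forget request to the mixer
    finally:
        commands.put(None)                      # sentinel: ask the mixer to shut down
        sound.join()
    return entities, mixed
```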

This is the most difficult way to thread an application: it takes a lot of thought to decide how and where to split the work, and the result is very challenging to debug and analyse. However, it has the advantage of scaling well beyond four, eight or even sixteen cores - essential in the multi-core future we're going to find ourselves in very soon.

Tools

To do this, Valve created its own set of tools. Whilst it evaluated existing options - such as operating system-level threading engines and open source threading engines - it found that these were inefficient when it came to games. "We gravitated towards creating a custom work management system that's designed specifically for gaming problems - its job is to keep the cores 100% utilised. We make it so that there are N-1 threads for N cores, with the other thread being the master synchronisation thread. The system allows the main thread to throw off work to as many cores as there might be available to run on, and it also allows itself to be subjugated to other threads if needs be."
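
A work management system of the kind described in the quote might look something like the following sketch. It assumes the simplest design: a shared job queue, N-1 worker threads for N cores, and a master thread that throws off a batch of jobs and then helps drain the queue itself rather than idling. Everything here (`JobSystem`, `run_batch`, the helping strategy) is a hypothetical illustration; Valve's actual scheduler is not described beyond the quote. As before, Python's GIL means this demonstrates the structure, not real multi-core throughput.

```python
import os
import queue
import threading

class _Batch:
    """Tracks one batch of jobs submitted by the master thread."""
    def __init__(self, count):
        self.results = [None] * count
        self.errors = []
        self.remaining = count
        self.cond = threading.Condition()

    def finish(self, index, value, error):
        with self.cond:
            self.results[index] = value
            if error is not None:
                self.errors.append(error)
            self.remaining -= 1
            if self.remaining == 0:
                self.cond.notify_all()

class JobSystem:
    """N-1 worker threads for N cores; the master (calling) thread is the
    Nth participant and runs queued jobs itself while it waits."""

    def __init__(self, num_cores=None):
        num_cores = num_cores or os.cpu_count() or 1
        self._jobs = queue.Queue()
        self._workers = [threading.Thread(target=self._worker_loop, daemon=True)
                         for _ in range(num_cores - 1)]
        for w in self._workers:
            w.start()

    @staticmethod
    def _execute(job):
        func, item, index, batch = job
        try:
            value, error = func(item), None
        except Exception as exc:           # a failing job must not kill its thread
            value, error = None, exc
        batch.finish(index, value, error)

    def _worker_loop(self):
        while True:
            job = self._jobs.get()
            if job is None:                # shutdown sentinel
                return
            self._execute(job)

    def run_batch(self, func, items):
        """Throw off func(item) for every item; return the results in order."""
        batch = _Batch(len(items))
        for index, item in enumerate(items):
            self._jobs.put((func, item, index, batch))
        # Rather than idling, the master thread helps drain the queue.
        while True:
            try:
                job = self._jobs.get_nowait()
            except queue.Empty:
                break
            self._execute(job)
        # Wait for any jobs still running on worker threads.
        with batch.cond:
            batch.cond.wait_for(lambda: batch.remaining == 0)
        if batch.errors:
            raise batch.errors[0]
        return batch.results

    def shutdown(self):
        """Stop the workers; must not be called while run_batch is in progress."""
        for _ in self._workers:
            self._jobs.put(None)           # one sentinel per worker
        for w in self._workers:
            w.join()
```

Having the master thread run jobs while it waits is one common way to keep all N cores busy with only N-1 extra threads. It also degrades gracefully: on a single-core machine there are no workers, and the master thread runs every job itself.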

The engine uses lock-free algorithms to solve one of the major problems of threading: access to shared data. Many of us have come across a similar problem before - if you're trying to edit a database or a web page whilst someone else is editing the same thing, one person ends up overwriting the other's changes. The same problem arises when multiple threads access one data set in-game.

"We realised that, 95% of the time, you're trying to read from a data set, and only 5% of the time are you actually trying to write to it, to modify it. So, we just allowed all the threads to read any time they want, and only write when the data was locked to that thread."
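
Strictly speaking, the quote describes readers that never wait and writers that must take a lock. One way to get that behaviour is copy-on-write publication, in the spirit of read-copy-update: readers grab the current immutable snapshot with no lock at all, while a writer takes a lock, builds a modified copy and publishes it with a single reference assignment. The hypothetical `ReadMostly` class below is an assumption about how such a scheme can work, not a description of Valve's data structures. It relies on CPython rebinding an attribute atomically; a language without that guarantee needs an atomic pointer swap plus safe reclamation of old copies.

```python
import threading
from types import MappingProxyType

class ReadMostly:
    """Read-mostly shared state. Readers take no lock: they grab the current
    read-only snapshot. Writers serialise on a lock, build a modified copy,
    then publish it with a single reference assignment."""

    def __init__(self, initial=None):
        self._snapshot = MappingProxyType(dict(initial or {}))
        self._write_lock = threading.Lock()

    def read(self):
        # No lock: rebinding self._snapshot is an atomic reference swap in
        # CPython, so a reader sees either the old or the new snapshot in
        # full, never a half-written one.
        return self._snapshot

    def write(self, key, value):
        with self._write_lock:            # only one writer at a time
            new = dict(self._snapshot)    # copy; readers of the old snapshot are unaffected
            new[key] = value
            self._snapshot = MappingProxyType(new)
```

Copying the data set on every write is only affordable because writes are rare, which is exactly the 95/5 read/write split Tom describes.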
